The main problem with NADE is that it is extremely slow to train and sample. When training on a MNIST digit, we need to compute log probability one pixel sequentially. Same goes for sampling a MNIST digit.

MADE – Mask Autoencoder [1] proposes a clever solution to speed up an autoregressive model. The key insight is that an autoregressive model is a special case of an autoencoder. By removing a weight carefully, one can convert an autoencoder to an autoregressive model. The weight removal is done through mask operations.

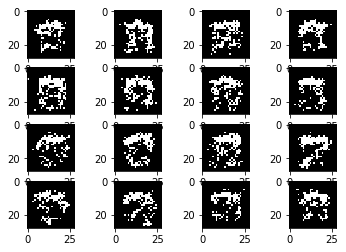

I used the implementation from [2] and trained MADE with a single layer of 500 hidden units on a binary MNIST dataset. I sample each image by first generating a random binary image, feed it to the MADE, then sample the first pixel. Then, I update the first-pixel value of the random binary vector. I then pass this random vector to the MADE again and so on.

Here are sampled images:

They look pretty bad! I barely notice a digit 7. This makes me wondered if a single layer MADE is not strong enough or the way I sample an image is not correct.

The strength of MADE is that training is very fast. This contribution alone makes it possible to train a large autoregressive model. I really like this paper.

References:

[1] https://arxiv.org/pdf/1502.03509v2.pdf

[2] https://github.com/karpathy/pytorch-made

You must be logged in to post a comment.