Since my internship will be over in a few days, I took up Siraj Raval's 100MLCodingChallenge: I plan to implement and blog about whatever topics or papers I like. This will be fun.

For the first day, I will tackle GANs. I have known about GANs for a few years but never got a chance to implement one. I am a huge fan of variational autoencoders, so I was a bit biased toward probabilistic models. But the main drawback of the VAE is its dependence on the reconstruction error, which may not be a good loss criterion for some interesting problems.
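Written out, the standard objectives show the difference. The VAE maximizes the evidence lower bound (ELBO), whose first term is the reconstruction term; the original GAN instead trains a generator $G$ and a discriminator $D$ in a minimax game with no explicit reconstruction loss. These are the textbook formulations, not anything specific to my implementation.

$$
\mathcal{L}_{\text{VAE}}(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] - \mathrm{KL}\big(q_\phi(z \mid x) \,\|\, p(z)\big)
$$

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$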

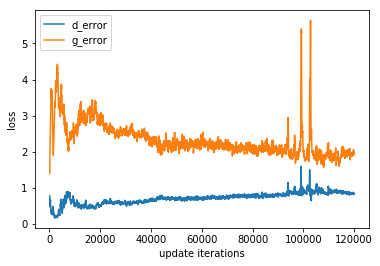

I implemented a simple GAN built from simple feedforward neural networks. According to the loss plot, my implementation seems to be working: the discriminator loss slowly increases while the generator loss slowly decreases over time.
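To make that loss behaviour concrete, here is a minimal pure-Python sketch of the two losses. It assumes the standard binary cross-entropy formulation with the non-saturating generator loss from the original GAN paper, which is not necessarily the exact setup of my implementation. `d_real` and `d_fake` are hypothetical lists of discriminator outputs in (0, 1) on a real batch and a generated batch.

```python
import math
from typing import Sequence

# Small constant that keeps log() away from log(0).
EPS = 1e-12


def bce(prob: float, target: float) -> float:
    """Binary cross-entropy for a single predicted probability."""
    prob = min(max(prob, EPS), 1.0 - EPS)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """D is rewarded for D(x) -> 1 on real data and D(G(z)) -> 0 on fakes."""
    real = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real + fake


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: G is rewarded for D(G(z)) -> 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)
```

When the discriminator separates the two batches easily (say `d_real = [0.9, 0.9]`, `d_fake = [0.1, 0.1]`), its loss is about 0.21 and the generator loss is about 2.30. If the generator catches up until the discriminator outputs 0.5 everywhere, the discriminator loss rises to 2 ln 2 ≈ 1.386 and the generator loss falls to ln 2 ≈ 0.693, which matches the trend in the plot.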

I also generated 16 images from random uniform noise. The image quality gets better and better over time as well.

Generated samples after 10, 50, 100, 150, and 200 epochs.
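The sampling step can be sketched as follows: draw 16 noise vectors, push each through the generator, and tile the results into a 4x4 grid. This is a pure-Python illustration under stated assumptions: the 100-dimensional noise drawn from U(-1, 1), the 28x28 image size, and `dummy_generator` are all hypothetical stand-ins, not the settings or the trained network from my implementation.

```python
import random
from typing import Callable, List, Optional

Image = List[List[float]]

# Assumed for illustration only: noise size, noise range, and image size.
NOISE_DIM = 100
IMG_SIZE = 28


def sample_noise(n: int, dim: int = NOISE_DIM,
                 rng: Optional[random.Random] = None) -> List[List[float]]:
    """Draw n noise vectors with entries from U(-1, 1)."""
    rng = rng or random.Random()
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(n)]


def tile_grid(images: List[Image], rows: int, cols: int) -> Image:
    """Stitch rows * cols equally sized images into one image, row-major."""
    assert len(images) == rows * cols
    h, w = len(images[0]), len(images[0][0])
    grid = [[0.0] * (cols * w) for _ in range(rows * h)]
    for idx, img in enumerate(images):
        r, c = divmod(idx, cols)  # grid cell for this image
        for y in range(h):
            for x in range(w):
                grid[r * h + y][c * w + x] = img[y][x]
    return grid


def dummy_generator(z: List[float]) -> Image:
    """Hypothetical stand-in for a trained generator: noise -> 28x28 image."""
    mean = sum(z) / len(z)
    return [[mean] * IMG_SIZE for _ in range(IMG_SIZE)]


def sample_grid(generator: Callable[[List[float]], Image],
                rows: int = 4, cols: int = 4, seed: int = 0) -> Image:
    """Generate rows * cols samples from fresh noise and tile them."""
    z = sample_noise(rows * cols, rng=random.Random(seed))
    return tile_grid([generator(v) for v in z], rows, cols)


z = sample_noise(16, rng=random.Random(0))
samples = [dummy_generator(v) for v in z]
grid = tile_grid(samples, rows=4, cols=4)
```

With a real model, `dummy_generator` would be replaced by the trained generator's forward pass, and the resulting `grid` saved as one image per checkpoint.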

That is it for the first day of the challenge. It will be a fun journey in the coming months.
